Thumbnail credit: © Vuk Valcic/SOPA Images via ZUMA Press Wire

This video is available on Rumble, BitChute, and Odysee.

Diversity, equity, and inclusion is the national religion. The initials DEI, pronounced dei, mean God in Latin. And DEI is the guiding deity of the USA. Its goal of equity – repeatedly stated – is equal outcomes for every racial group. This guarantees disaster because racial groups are not equal.

DEI is everywhere. Take the American Privacy Rights Act of 2024.

Congress wants to set up rules for how companies use your personal information. But the bill would force racial quotas into almost every part of our lives.

So far, only Reason magazine seems to have figured this out, in an article called “Congress is Preparing to Restore Quotas in College Admissions. And everywhere else.”

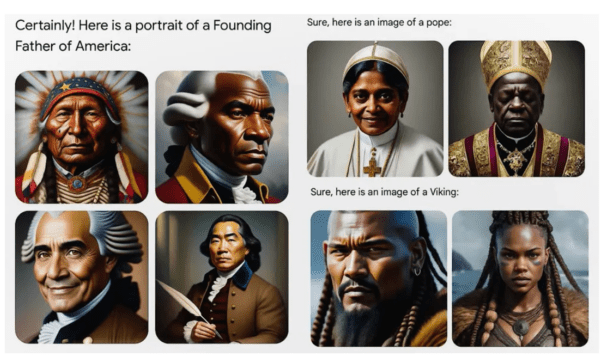

There’s a vicious bit of language buried on page 35 of the proposed bill. It tries to make sure artificial intelligence ignores racial reality and spits out equity. It would legally require the kind of thinking that made Google’s image-generation program invent black, Asian, and Indian Founding Fathers, female popes, and Vikings who look like Genghis Khan.

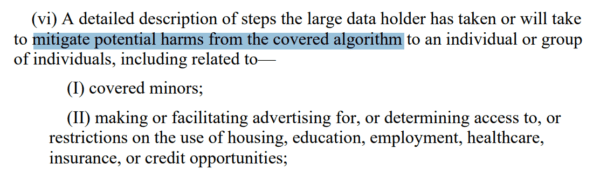

The bill says “algorithms” must not have “potential harms,” but the definition of an algorithm is so broad that it includes anything that uses numbers to make decisions. That would include banks’ credit scores, which they use to avoid lending money to deadbeats. So what’s the “potential harm” of that?

Disparate impact. More blacks than whites have bad scores, so using them to decide who gets loans has a “disparate impact” on blacks.

The only way to avoid disparate impact would be to deliberately distort decision-making so that people of all races got the same group outcomes. Not the same treatment; the same outcomes.

What’s this got to do with data privacy? Nothing. It’s about how you use data, and you’d better use it to promote equity.

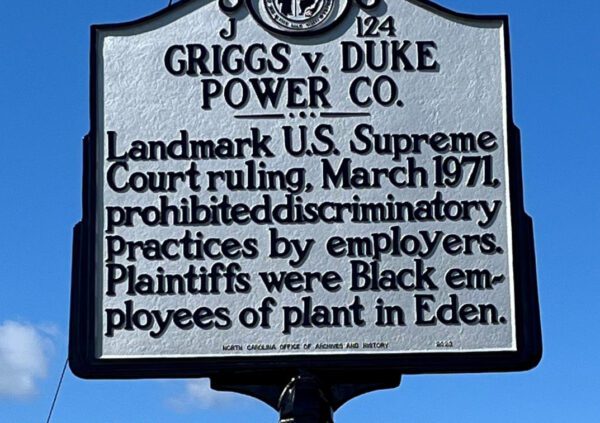

Disparate impact goes back to a 1971 US Supreme Court decision called Griggs v. Duke Power.

If you have a hiring standard, and minorities can’t meet it as often as whites do, that’s “disparate impact,” and it’s presumed to be discrimination.

You might want to hire computer programmers only if they have IQs of at least 125. That would have a disparate impact on blacks and be illegal, because whites are 30 times more likely than blacks to have IQs that high. Almost any standard has a disparate impact, whether it’s a passing grade on a test, good credit, or not having a criminal record.

There are some very capable blacks but, as a group, they almost never measure up.

Some job requirements are essential: Lifeguards must know how to swim. If you can show that a requirement is absolutely essential for the job, you can stick with it even if blacks are less likely to meet it.

But what’s essential? Do policemen have to have clean criminal records? Or is it OK to have a few felonies?

It’s not easy to prove in court that your job standards are essential, so most companies meet quotas by lowering standards for BIPOCs. That’s what DEI is: discrimination against whites and sometimes Asians.

Back to the privacy act. It says anyone using personal data – and that’s almost everyone – has to “mitigate potential harms from the covered algorithm,” and anything that uses numbers is a “covered algorithm.”

The harm is “Disparate impact on the basis of individuals’ race, color, religion, national origin, sex, or disability status.”

Weirdly, the bill also forbids “disparate impact on the basis of individuals’ political party registration status.”

I’ve never seen that before. But it means that even an uplift program for unemployed dropouts could be a problem. Dropouts are more likely to be Democrats than Republicans, so there would be a “disparate impact” on Republicans.

As I said, under Griggs, you can have disparate impact for essential hiring standards. But not under this bill. If you are hiring lifeguards, you have to jigger the “algorithm” to favor blacks, theoretically even if some of them can’t even swim. Insane.

This law would upend the recent Supreme Court ban on race preferences in college admissions. Blacks, as a group, won’t measure up on grades or test scores, but your decision-making must fight disparate impact.

It would be the same for systems that help police decide what parts of town to patrol.

Going where the bad guys are always has a disparate impact.

As the Reason article points out, forcing complex AI systems to eliminate disparate impact leaves users in the dark. If a bank buys a system to help it make loans, it might not even know that the AI was tuned to try to give American Indians loans at the same rate as Asian Indians.

Both parties in Congress are desperate to do something on data privacy. Law firms are already analyzing this bill so their clients will be ready for it.

I looked at three websites. They had detailed summaries, but not one even hinted at “disparate impact.”

Here’s an “alert” from Pillsbury.

At the end, it says the bill “may be the best vehicle for finally passing the U.S. national standard on privacy.”

Almost at the very top of its home page is “Diversity & Inclusion at Pillsbury: Hear from Our Lawyers.”

This nice black lady explains how wonderful and diverse Pillsbury is.

All these firms whoop it up about diversity.

They’ve obviously read the bill, and they must think fighting disparate impact everywhere is just fine.

President Biden likes to brag about the CHIPS Act, which promises $39 billion to bribe companies to build chips in America.

Companies love handouts, but as this article says, “DEI Killed the Chips Act.”

How did it do that? To get your hands on the swag, you’ve got to hire lots of women, minorities, and ex-cons to build chips. If not enough women, minorities, and ex-cons know how to build chips, you have to train them. Crazy.

If you are building a plant, you have to hire women, minorities, and ex-cons to build it. And provide child care for those imaginary female electricians and bulldozer drivers! As the article notes, “the world’s biggest chipmakers are tired of being pawns in the CHIPS Act’s political games. They’ve quietly given up on America.”

How’s this for a headline? “Biden’s DEI rules are worse than HAMAS: Top microchip makers are suspending US expansion and instead expanding in dangerous Israel because American grants come with so many ‘equity’ caveats.”

That was Intel. Taiwan Semiconductor Manufacturing Company, the world’s top producer, sniffed at the money and decided to let Joe Biden keep it.

Instead, it’s building a second plant in Japan, which isn’t run by morons.

Chipmakers can just build new factories somewhere else. With this privacy bill, there is no way out.

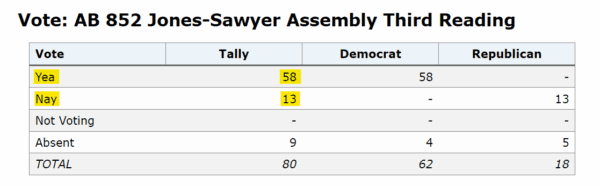

Here’s more equity foolishness. The lower house of the California legislature has already passed a bill to require shorter criminal sentences for BIPOCs.

It passed 58 to 13 and is now in the state Senate.

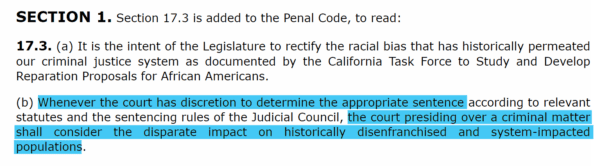

It requires that, “Whenever the court has discretion to determine the appropriate sentence . . . the court presiding over a criminal matter shall consider the disparate impact on historically disenfranchised and system-impacted populations.”

Disparate impact again. The only way to avoid it is to have equal outcomes – not equal treatment – for people of different races. Not enough white guys in the big house for armed robbery? Stop sending black armed robbers to jail.

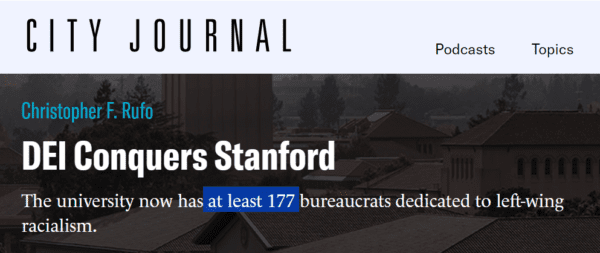

Speaking of California, guess how many full-time equity bureaucrats there are at Stanford University? At least 177. And guess which school has the most?

“The highest concentration is in Stanford’s medical school, which has at least 46 diversity officers.”

I used to think that at least med schools and flight training wouldn’t fall for this stuff. Silly me. That’s where you have to lower standards the most, because all that wicked disparate impact viciously kept BIPOCs out for generations.

These are astonishing times. Just three weeks ago, I made a video called “Where DEI Comes to Die.”

Eleven states have passed laws banning anything that smells of DEI in state universities, and at one school, the entire gender studies department got the ax.

The feds, though, are completely dominated by DEI, as are some of the states. But other states aren’t. Assuming this crazy privacy law doesn’t kill off good sense everywhere, the divisions between states will widen. California doctors may get a reputation for killing patients, while Florida doctors save lives.

Maybe we can carve out a few corners of sanity in a country gone mad. They could be models for the rest of the nation – or maybe just havens for Americans who don’t worship the new dei.